Azure DevOps (VSTS) - The current operating system is not capable of running this task, with "Azure File Copy"

If you've ever encountered the Azure DevOps/VSTS error "The current operating system is not capable of running this task" when trying to use, for example, the "Azure File Copy" task, read on to learn about a workaround using the Azure CLI build task.

In my daily work with Azure DevOps, one of the many things I do is build Docker images and handle related tasks. Since I run everything on Linux, and the Hosted Ubuntu Linux agent is ridiculously fast, I use it for all the tasks in my pipelines.

Unfortunately, if you want to use the Azure File Copy task, you're out of luck: it is currently not supported on Linux (though it should be eventually). You might end up with this error:

The current operating system is not capable of running this task. That typically means the task was written for Windows only. For example, written for Windows Desktop PowerShell.

So that's that. Either I go back to a Windows agent for these specific tasks, or I find a way to work around the problem.

Using Azure CLI to replace Azure File Copy on Linux agents

With the Azure CLI, we can also easily copy files to blob containers, file shares and more. Azure DevOps/VSTS now has an Azure CLI build task that lets us run any Azure CLI script we like.

To replicate what I used to do with the "Azure File Copy" task, I looked at the Azure CLI's az storage commands. For uploading a local folder to a blob container, az storage blob upload-batch does the job.

The task signs in with the same service principal (through the Azure service connection) that your other tasks already use, so your build keeps working without additional configuration.
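If you want to try the same steps from a local Linux shell before putting them in a pipeline, here is a rough sketch of the sequence the Azure CLI task prints in its log further down (cloud set, service principal login, account set, your script, account clear). It is not the task's actual implementation. The function name and the environment variables `AZ_SP_APP_ID`, `AZ_SP_SECRET`, `AZ_TENANT_ID` and `AZ_SUBSCRIPTION_ID` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch only: mirrors the login steps the Azure CLI task prints in its log.
# AZ_SP_APP_ID, AZ_SP_SECRET, AZ_TENANT_ID and AZ_SUBSCRIPTION_ID are
# hypothetical variable names; provide your own service principal details.
set -euo pipefail

run_as_service_principal() {
  # Like the task, keep the CLI's login state in a throwaway config directory,
  # so "az account clear" below does not wipe your personal login.
  export AZURE_CONFIG_DIR="$(mktemp -d)"

  az cloud set -n AzureCloud
  az login --service-principal \
    -u "${AZ_SP_APP_ID:?set AZ_SP_APP_ID}" \
    -p "${AZ_SP_SECRET:?set AZ_SP_SECRET}" \
    --tenant "${AZ_TENANT_ID:?set AZ_TENANT_ID}" > /dev/null
  az account set --subscription "${AZ_SUBSCRIPTION_ID:?set AZ_SUBSCRIPTION_ID}"

  # Run the caller's command, then clear the cached credentials either way
  # and pass the command's exit status back to the caller.
  local status=0
  "$@" || status=$?
  az account clear
  return "$status"
}
```

For example, `run_as_service_principal az storage blob upload-batch -d "webportalbinaries" --account-name "yourstorageaccount" -s ./yourdropfolder` would run the upload shown below under the service principal.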

Copy files to Azure using "az storage blob upload-batch" from DevOps

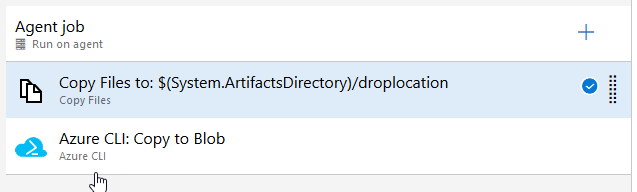

I have two tasks in this specific release step.

- Copy Files: Copies files from my build output/drop to the correct target folder on the build agent.

- Azure CLI: Uploads the contents of that folder on the build agent to a blob container in my Azure Storage account.

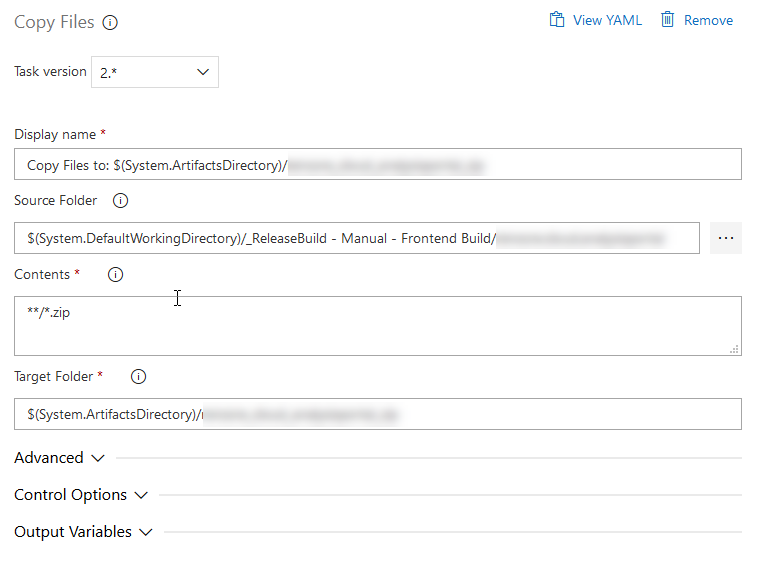

Task - Copy Files

This task recursively copies all the .zip files in the given source folder and puts them in the folder I specify in "Target Folder".
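To make clear what this step amounts to, or to try it on your own machine, here is a rough shell equivalent. It assumes the .zip files should land directly in the target folder, which is what the task's "Flatten Folders" option does; by default the Copy Files task recreates the source subfolders instead. The function name and paths are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: a local stand-in for the "Copy Files" step. Recursively finds every
# .zip under the source folder and copies it straight into the target folder
# (flattened: no subfolders are recreated). Names are hypothetical.
set -euo pipefail

stage_zip_files() {
  local source_dir="$1" target_dir="$2"
  mkdir -p "$target_dir"
  # -type f skips directories; each matching path is handed to cp as {}.
  find "$source_dir" -type f -name '*.zip' -exec cp -- {} "$target_dir/" \;
}
```

Usage: `stage_zip_files ./drop ./yourdropfolder`. One consequence of flattening: two .zip files with the same name in different subfolders overwrite each other in the target folder.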

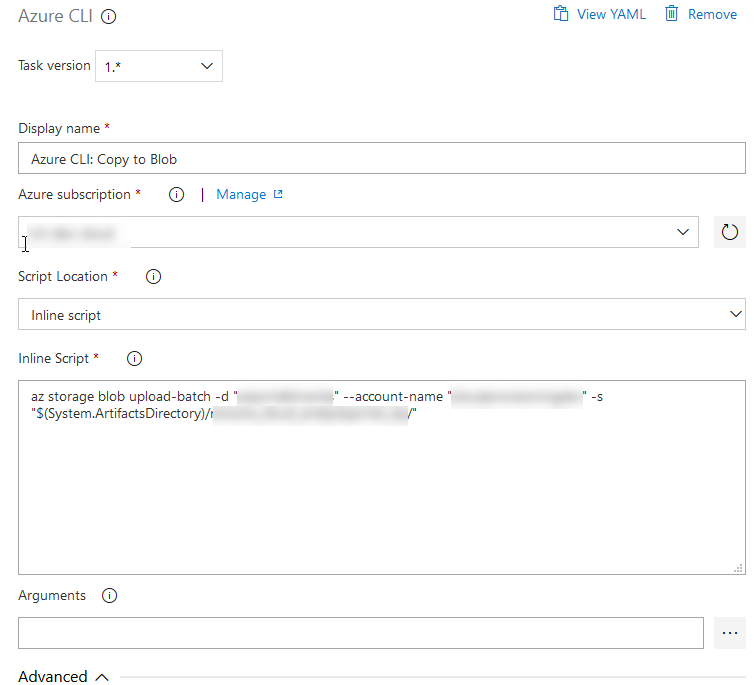

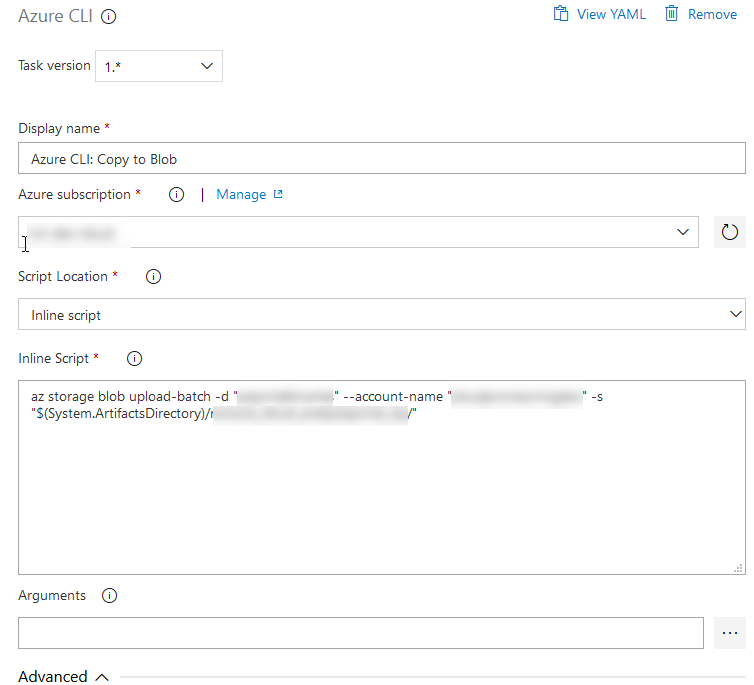

Task - Azure CLI

With this task, we can simply enter whatever command we want, including pipeline variables such as $(System.ArtifactsDirectory). Here's what I'm running:

```bash
az storage blob upload-batch -d "webportalbinaries" --account-name "yourstorageaccount" -s "$(System.ArtifactsDirectory)/yourdropfolder/"
```

This command uploads every file in the drop folder (the target folder of the previous Copy Files task, as described above) to the blob container named "webportalbinaries" in the given storage account.
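As a sketch, here is a slightly more defensive version of that inline script: it fails the step when the drop folder is missing or empty, and uses --pattern so that only .zip files are uploaded. The function name is hypothetical; the container, account and folder names are the placeholders from above.

```bash
#!/usr/bin/env bash
# Sketch of a more defensive inline script for the Azure CLI task.
# Function name is hypothetical; arguments are the post's placeholders.
set -euo pipefail

upload_drop_folder() {
  local source_dir="$1" container="$2" account="$3"
  # Fail loudly instead of silently uploading nothing.
  if [[ ! -d "$source_dir" ]] || [[ -z "$(ls -A "$source_dir")" ]]; then
    echo "Nothing to upload: '$source_dir' is missing or empty" >&2
    return 1
  fi
  # Upload only the .zip files from the drop folder into the blob container.
  az storage blob upload-batch \
    -d "$container" \
    --account-name "$account" \
    -s "$source_dir" \
    --pattern '*.zip'
}
```

In the task you could call it as `upload_drop_folder "$SYSTEM_ARTIFACTSDIRECTORY/yourdropfolder" webportalbinaries yourstorageaccount`. Azure Pipelines exposes System.ArtifactsDirectory to scripts as the SYSTEM_ARTIFACTSDIRECTORY environment variable, so the same script also runs outside the pipeline if you set that variable yourself.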

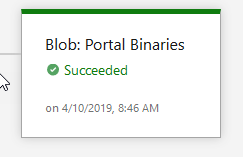

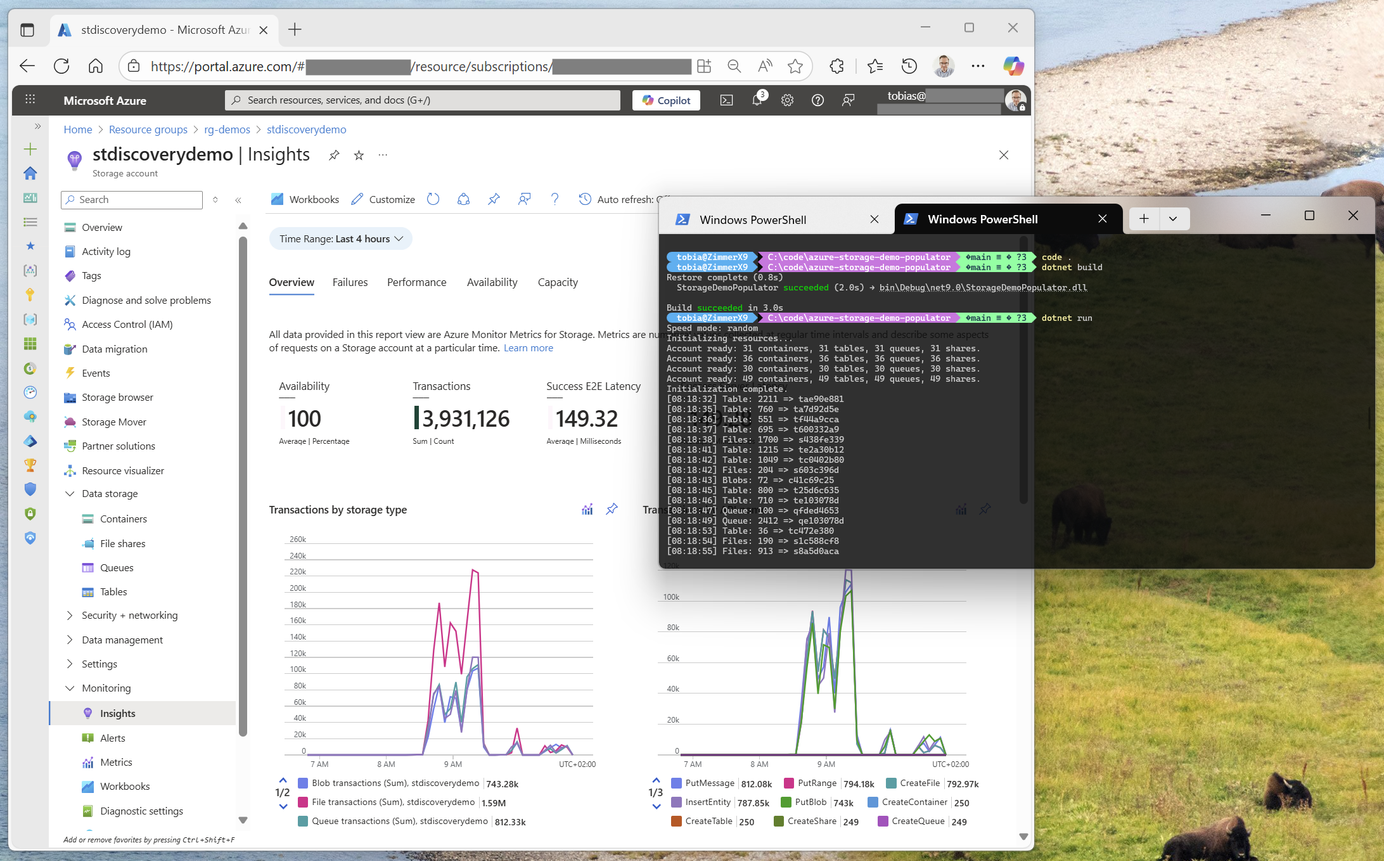

Easy peasy. Azure CLI to the rescue once again. When the release runs, the logs show that the command executed successfully, and the release step is green and awesome.

The logs say we're good:

```
2019-04-10T06:46:25.2764711Z Setting AZURE_CONFIG_DIR env variable to: /home/vsts/work/_temp/.azclitask
2019-04-10T06:46:25.2772721Z Setting active cloud to: AzureCloud
2019-04-10T06:46:25.2802663Z [command]/usr/bin/az cloud set -n AzureCloud
2019-04-10T06:46:29.3781591Z [command]/usr/bin/az login --service-principal -u *** -p *** --tenant ***
2019-04-10T06:46:32.2613903Z [
2019-04-10T06:46:32.2614525Z {
2019-04-10T06:46:32.2614739Z "cloudName": "AzureCloud",
2019-04-10T06:46:32.2615915Z "id": "YOUR_GUID",
2019-04-10T06:46:32.2616118Z "isDefault": true,
2019-04-10T06:46:32.2616465Z "name": "dev-subscription",
2019-04-10T06:46:32.2616715Z "state": "Enabled",
2019-04-10T06:46:32.2617464Z "tenantId": "***",
2019-04-10T06:46:32.2617688Z "user": {
2019-04-10T06:46:32.2618036Z "name": "***",
2019-04-10T06:46:32.2618202Z "type": "servicePrincipal"
2019-04-10T06:46:32.2618875Z }
2019-04-10T06:46:32.2619041Z }
2019-04-10T06:46:32.2619188Z ]
2019-04-10T06:46:32.2640596Z [command]/usr/bin/az account set --subscription 994440ab-3c19-4892-8746-f69bb2acb578
2019-04-10T06:46:33.0043061Z [command]/bin/bash /home/vsts/work/_temp/azureclitaskscript1554878759628.sh
2019-04-10T06:46:40.5170615Z
2019-04-10T06:46:40.5171604Z 1/1: "20538.zip"[#####################################################] 100.0000%
2019-04-10T06:46:40.5229870Z Finished[#############################################################] 100.0000%
2019-04-10T06:46:40.5230379Z [
2019-04-10T06:46:40.5230560Z {
2019-04-10T06:46:40.5230777Z "Blob": "https://yourstorageaccount.blob.core.windows.net/redacted/20538.zip",
2019-04-10T06:46:40.5231820Z "Last Modified": "2019-04-10T06:46:40+00:00",
2019-04-10T06:46:40.5232082Z "Type": "application/zip",
2019-04-10T06:46:40.5232518Z "eTag": "\"0x8D6BD80496758DC\""
2019-04-10T06:46:40.5232980Z }
2019-04-10T06:46:40.5233466Z ]
2019-04-10T06:46:40.6044463Z [command]/usr/bin/az account clear
2019-04-10T06:46:41.3494187Z ##[section]Finishing: Azure CLI: Copy to Blob
```

In Azure Storage Explorer, we can clearly see that the file was successfully uploaded to my container. I'm happy: the flow works, and I can keep using Linux-only agents for all of my workloads.

The results look good: the command uploaded a single file, which is all my source folder contains in my case. The file is called 20538.zip, after the number of my build.
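If you'd rather check the upload from the pipeline itself instead of opening Storage Explorer, something like the following could run as a final Azure CLI step. It's a sketch built on az storage blob exists; the function name is hypothetical, as is the assumption that the blob is named after the build number.

```bash
#!/usr/bin/env bash
# Sketch: fail the step unless the expected blob exists in the container.
# Function name and blob naming scheme are hypothetical assumptions.
set -euo pipefail

assert_blob_uploaded() {
  local container="$1" account="$2" blob_name="$3" exists
  # "az storage blob exists" returns {"exists": true|false}; extract the value.
  exists=$(az storage blob exists \
    --container-name "$container" \
    --account-name "$account" \
    --name "$blob_name" \
    --query exists -o tsv)
  if [[ "$exists" != "true" ]]; then
    echo "Blob '$blob_name' not found in container '$container'" >&2
    return 1
  fi
  echo "Found $blob_name in $container"
}
```

If your zip follows the build number, as mine does, the call could be `assert_blob_uploaded webportalbinaries yourstorageaccount "$BUILD_BUILDNUMBER.zip"`.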

Summary and Links

There we have it. With just a few changes to the previous setup, where we used Azure File Copy tasks, we can now use the full power of the Azure CLI directly in our DevOps pipelines.

I'm fully embracing the CLI and use it daily. Now I can reuse what I know in the build and release pipelines too, and know that it works very well.

Additional reading:

- Azure DevOps: Azure CLI task overview

- GitHub: A Microsoft staff member mentions that cross-platform support for the Azure File Copy task is on the backlog.
