Building custom Data Collectors for Azure Log Analytics in C#

Learn how to send logs to Azure Log Analytics using C# with this simple helper wrapper.

Update July 2020: The wrapper code now exists on NuGet, so you can simply pull the package into your solution.

https://www.nuget.org/packages/loganalytics.client

In this article, I'm talking about how you can integrate Log Analytics into your applications to send logs to Log Analytics from code - logs that you can then query, render charts on and crunch any way you want.

We'll walk through the following topics:

- 1. What is Azure Log Analytics?

- 2. What is Log Analytics Data Collector API?

- 3. Send custom data collections to Log Analytics using C#

- 4. See the results in Log Analytics

1. What is Azure Log Analytics?

In case you've stumbled on this post without the prior knowledge of what Log Analytics is all about, here's a super-brief version.

Log Analytics in Azure is a part of Azure Monitor. It comes with excellent capabilities for collecting data and telemetry from your logs and gives you extremely sophisticated experiences in querying and working with the data.

"Log data collected by Azure Monitor is stored in Log Analytics which includes a rich query language to quickly retrieve, consolidate, and analyze collected data. You can create and test queries using the Log Analytics page in the Azure portal and then either directly analyze the data using these tools or save queries for use with visualizations or alert rules."

- Microsoft Docs: What is Azure Monitor?

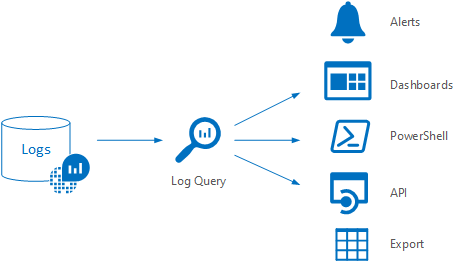

There are a lot of built-in tools, services, and resources in Azure that tie neatly into Azure Monitor and, by extension, the Log Analytics feature set. The stored logs can be accessed in a variety of ways, including Alerts, the Azure Portal, PowerShell, the API, and more.

Image from Analyze Log Analytics data in Azure Monitor

A short list of benefits I like with Log Analytics includes, but is not limited to:

- Powerful log aggregation across Azure resources, and custom log entries

- Easily build charts and visuals over the aggregated data

- Powerful query language to work with the enormous amounts of data you may have, across resources

- Not limited to viewing or querying logs for only a specific set of resources, or one resource in particular - you can query across the board, giving you the possibility to easily aggregate logs across multiple deployments or, in my case, containers.

- Enable easy trend analysis for vast amounts of data

- Custom Logs (This is what we're looking at in this blog post)

Consider Log Analytics to be the tool or service you use when you want to drill down into the data you have. Azure Monitor is better for near real-time data, while Log Analytics is more focused on working with the data, aggregations, complex queries and enabling advanced visuals based on the queries, etc.

1.1. What are Custom logs in Log Analytics?

In Azure Log Analytics, you can already ingest and work with a lot of data from built-in resources and services in Azure. However, if you're building custom applications and want advanced ways to work with the logs coming out of those applications, then Log Analytics could be something for you.

That's where custom logs, which we can send using the Data Collector API, come into play.

The following sections of this article focus specifically on the Data Collector API and how we, using C# in .NET Core, can send custom logs directly to Log Analytics and then work with the data we've provided.

If you're building distributed platforms and services, it could be interesting to look at how to use Log Analytics to discover and work with trends over time. When I have millions of log entries over short periods of time, I need a way to properly query these logs and build an understanding of what's going on. Log Analytics can do precisely this.

2. What is Log Analytics Data Collector API?

Log Analytics provides support to send data through an HTTP Data Collector API, which is REST-based.

This API enables us to send logs to the Log Analytics service from our custom applications, services, workflows, etc. By doing this, we can then query/search, aggregate and build reports based on the data quickly from the built-in features of the Log Analytics dashboards and tools in the Azure Portal - or from code.
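The following sketch makes the REST shape concrete. It is not the wrapper's code. It builds, but does not send, the kind of request the Data Collector API expects:

- a POST to the workspace's `ods.opinsights.azure.com` endpoint with `api-version=2016-04-01`;
- `Log-Type`, `x-ms-date`, and `Authorization` headers;
- a JSON body.

The class and method names are hypothetical. The Authorization value is passed in precomputed.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;

// Hypothetical helper: builds (but does not send) a Data Collector API request.
public static class DataCollectorRequest
{
    // API version used by the Data Collector API documentation samples.
    public const string ApiVersion = "2016-04-01";

    public static HttpRequestMessage Build(
        string workspaceId, string logType, string json, string rfc1123Date, string authorization)
    {
        var uri = new Uri($"https://{workspaceId}.ods.opinsights.azure.com/api/logs?api-version={ApiVersion}");
        var request = new HttpRequestMessage(HttpMethod.Post, uri);

        // Name of the custom log the records go to, and the request date (RFC 1123).
        request.Headers.Add("Log-Type", logType);
        request.Headers.Add("x-ms-date", rfc1123Date);

        // "SharedKey {workspaceId}:{signature}", passed through exactly as computed.
        request.Headers.TryAddWithoutValidation("Authorization", authorization);
        request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));

        // The body is a JSON array of log entries.
        request.Content = new StringContent(json, Encoding.UTF8);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/json");
        return request;
    }
}
```

Sending the result with `HttpClient.SendAsync` is then a single call. The wrapper described below does the equivalent for you.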

2.1. Good to know: Expect some latency for your data to be available

Working with Log Analytics is pretty slick. It can handle immense amounts of data, and it's easy to query the logs once the data is there.

However, be aware that you shouldn't expect real-time availability. There is a delay between when you send a log entry and when it's available for analysis and queries.

- Read more: Data ingestion time in Log Analytics

2.2. Good to know: Valid data types for Log Analytics

In Log Analytics, there's support for a handful of data types. Data is passed as JSON.

Valid data types are now listed in the preview documentation here: https://docs.microsoft.com/en-us/azure/log-analytics/log-analytics-data-collector-api#record-type-and-properties

Currently, they are:

- string

- bool

- double

- DateTime

- Guid
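In a custom log, Log Analytics names each column after the property and appends a type suffix. That is why a `Message` string property later shows up as `Message_s`. The documentation lists these suffixes:

- string: `_s`
- bool: `_b`
- double: `_d`
- DateTime: `_t`
- Guid: `_g`

The helper below is a hypothetical illustration of that mapping. It is not part of the wrapper.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: maps the supported C# types to the column suffix
// Log Analytics appends to custom log fields.
public static class LogAnalyticsColumns
{
    private static readonly Dictionary<Type, string> Suffixes = new Dictionary<Type, string>
    {
        [typeof(string)] = "_s",
        [typeof(bool)] = "_b",
        [typeof(double)] = "_d",
        [typeof(DateTime)] = "_t",
        [typeof(Guid)] = "_g",
    };

    // Returns, for example, "Message_s" for a string property named "Message".
    public static string ColumnName(string propertyName, Type propertyType)
    {
        if (!Suffixes.TryGetValue(propertyType, out string suffix))
            throw new ArgumentOutOfRangeException(nameof(propertyType), $"{propertyType} is not a supported Log Analytics type.");
        return propertyName + suffix;
    }
}
```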

3. Send custom data collections to Log Analytics using C#

In this section, we'll take a look at how we can practically send the log data from our custom code to the Log Analytics service in Azure.

Microsoft has some excellent documentation for the auth and API parts here: https://docs.microsoft.com/en-us/azure/log-analytics/log-analytics-data-collector-api

In the following section, I'm sharing some helper code to get you started.

3.1. Use the LogAnalytics.Client to send logs easily

I built a simple Log Analytics Client (GitHub). It wraps up the C# logic required for authorization and for formatting the requests correctly, which makes it easy to send many requests through the pipe.

The REST API expects a JSON-formatted object or collection of objects (the log entries) to be passed in. The wrapper lets us pass whatever entity we want; it serializes the entity to JSON at runtime and sends it on to the API.
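The wrapper always sends a JSON array, even for a single entry, because it wraps one entity in a `List<T>` before serializing. The sketch below shows that payload shape using `System.Text.Json`, which ships with .NET Core 3.0 and later. This is a swap for illustration only: the wrapper itself uses Newtonsoft.Json's `JsonConvert.SerializeObject`. The `DemoRecord` and `PayloadBuilder` types are hypothetical.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical record type that uses only supported property types.
public class DemoRecord
{
    public string Message { get; set; }
    public bool IsError { get; set; }
    public double DurationMs { get; set; }
}

public static class PayloadBuilder
{
    // Serializes a collection of entries into the JSON array body.
    public static string SerializeEntries<T>(IEnumerable<T> entries) => JsonSerializer.Serialize(entries);

    // A single entry still goes out as a one-element array.
    public static string SerializeEntry<T>(T entry) => JsonSerializer.Serialize(new List<T> { entry });
}
```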

I've also added checks for supported data types. If you try to pass an unsupported data type, the wrapper throws an exception that tells you which property failed.

It means:

- Authorization is handled. Simply pass your Workspace ID and Shared Key to the constructor.

- Generic <T> methods, meaning you can define your own data types in C# and pass them straight to the wrapper when sending log entries.

- Property validation to ensure that only supported types are used in the entities you log.
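The authorization the wrapper handles is a Shared Key signature. It is an HMAC-SHA256 over a string built from five parts:

- the HTTP method;
- the body length in bytes;
- the content type;
- the `x-ms-date` value;
- the resource path.

The HMAC is keyed with the Base64-decoded workspace key. Below is a minimal standalone sketch of that computation, mirroring the scheme in Microsoft's Data Collector API documentation. The class and method names are hypothetical.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper that computes the Authorization header value.
public static class SharedKeySigner
{
    // Returns "SharedKey {workspaceId}:{Base64 HMAC-SHA256 signature}".
    public static string BuildAuthorization(string workspaceId, string sharedKeyBase64, string jsonBody, string rfc1123Date)
    {
        // The signed content length is the UTF-8 byte count of the body, not its character count.
        int contentLength = Encoding.UTF8.GetByteCount(jsonBody);
        string stringToSign = $"POST\n{contentLength}\napplication/json\nx-ms-date:{rfc1123Date}\n/api/logs";

        byte[] key = Convert.FromBase64String(sharedKeyBase64);
        using (var hmac = new HMACSHA256(key))
        {
            byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
            return $"SharedKey {workspaceId}:{Convert.ToBase64String(hash)}";
        }
    }
}
```

The date must be the same RFC 1123 string sent in the `x-ms-date` header, for example `DateTime.UtcNow.ToString("r")`.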

The wrapper uses parts of code from the preview documentation available here: https://docs.microsoft.com/en-us/azure/log-analytics/log-analytics-data-collector-api

Putting that together to a simple helper was easy enough, and the log entries can now flow!

3.2. Send a log entry

Using the wrapper to send a single log entry is simple:

```csharp
LogAnalyticsClient logger = new LogAnalyticsClient(
    workspaceId: "your workspace id",
    sharedKey: "your shared key");

await logger.SendLogEntry(new TestEntity
{
    Category = GetCategory(),
    TestString = "String Test",
    TestBoolean = true,
    TestDateTime = DateTime.UtcNow,
    TestDouble = 2.1,
    TestGuid = Guid.NewGuid()
}, "demolog")
    .ConfigureAwait(false); // Optionally add ConfigureAwait(false) here, depending on your scenario
```
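The snippet above assumes that a `TestEntity` class and a `GetCategory()` helper exist in your own code. Here is a hypothetical version that would pass the wrapper's type validation. The property types are what matter; the category values are placeholders.

```csharp
using System;

// Hypothetical entity: every property uses a supported type
// (string, bool, double, DateTime, Guid), so the wrapper's validation accepts it.
public class TestEntity
{
    public string Category { get; set; }
    public string TestString { get; set; }
    public bool TestBoolean { get; set; }
    public DateTime TestDateTime { get; set; }
    public double TestDouble { get; set; }
    public Guid TestGuid { get; set; }
}

public static class SampleData
{
    private static readonly string[] Categories = { "Information", "Warning", "Error" };
    private static readonly Random Rng = new Random();

    // Hypothetical stand-in for the GetCategory() helper used in the snippet.
    public static string GetCategory() => Categories[Rng.Next(Categories.Length)];
}
```

An `int` property would be rejected by the wrapper's validation, because `int` is not one of the allowed types. Use `double` for numbers instead.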

3.3. Send a collection of log entries

Log Analytics can take some time to process requests, and each request carries its own overhead in consumption and bandwidth. It therefore makes sense to bundle the log entries you send into fewer requests.

A simple example of this using the wrapper looks like this:

```csharp
LogAnalyticsClient logger = new LogAnalyticsClient(
    workspaceId: "LAW ID",
    sharedKey: "LAW KEY");

// Example: Wiring up 5000 entities into an "entities" collection.
List<DemoEntity> entities = new List<DemoEntity>();
for (int ii = 0; ii < 5000; ii++)
{
    entities.Add(new DemoEntity
    {
        Criticality = GetCriticality(),
        Message = "lorem ipsum dolor sit amet",
        SystemSource = GetSystemSource()
    });
}

// Send all 5000 log entries at once, in a single request.
await logger.SendLogEntries(entities, "demolog").ConfigureAwait(false);
```

In this example, we're throwing in thousands of entries with a single REST call using the wrapper. It makes it easy to bundle up and push a chunk of data over, which will then be processed by Log Analytics.

In my tests with this approach, it took about 0.7 seconds to generate and send about 10,000 log entities. I'd say that's a pretty good result, and a lot quicker than sending them one by one.
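Bundling has one caveat. The Data Collector API documentation lists a size limit per post (30 MB at the time of writing), so very large collections are better split into several requests. Below is a minimal batching sketch. It assumes a fixed entry count per batch is a good enough proxy for payload size in your scenario. The helper name and batch size are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for splitting log entries into several requests.
public static class Batching
{
    // Splits a list into consecutive batches of at most batchSize items.
    public static List<List<T>> Split<T>(List<T> items, int batchSize)
    {
        if (items == null) throw new ArgumentNullException(nameof(items));
        if (batchSize <= 0) throw new ArgumentOutOfRangeException(nameof(batchSize));

        var batches = new List<List<T>>();
        for (int start = 0; start < items.Count; start += batchSize)
        {
            int count = Math.Min(batchSize, items.Count - start);
            batches.Add(items.GetRange(start, count));
        }
        return batches;
    }
}
```

Each batch can then be passed to `logger.SendLogEntries(batch, "demolog")` in turn. On .NET 6 and later, LINQ's `Enumerable.Chunk` does the same splitting.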

3.4. Client source code

The simple LogAnalytics Client project is available on GitHub (https://github.com/Zimmergren/LogAnalytics.Client). Feel free to grab it and do with it as you please.

It may get some improvements down the road, but as of this writing you should consider it a proof of concept. If you're looking to use it in production or in sensitive projects, you should harden it properly first. Why not submit a PR on GitHub so everyone can benefit?

Here's the main code; everything is available in the GitHub project.

```csharp
using Newtonsoft.Json;
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Reflection;
using System.Security.Cryptography;
using System.Text;
using System.Text.RegularExpressions;
using System.Threading.Tasks;

namespace LogAnalytics.Client
{
    public class LogAnalyticsClient : ILogAnalyticsClient
    {
        private string WorkspaceId { get; }
        private string SharedKey { get; }
        private string RequestBaseUrl { get; }

        // You might want to implement your own disposing patterns, or use a static HttpClient instead. Use cases vary depending on how you'd be using the code.
        private readonly HttpClient httpClient;

        public LogAnalyticsClient(string workspaceId, string sharedKey)
        {
            if (string.IsNullOrEmpty(workspaceId))
                throw new ArgumentNullException(nameof(workspaceId), "workspaceId cannot be null or empty");

            if (string.IsNullOrEmpty(sharedKey))
                throw new ArgumentNullException(nameof(sharedKey), "sharedKey cannot be null or empty");

            WorkspaceId = workspaceId;
            SharedKey = sharedKey;
            RequestBaseUrl = $"https://{WorkspaceId}.ods.opinsights.azure.com/api/logs?api-version={Consts.ApiVersion}";
            httpClient = new HttpClient();
        }

        public async Task SendLogEntry<T>(T entity, string logType)
        {
            if (entity == null)
                throw new ArgumentNullException(nameof(entity), "parameter 'entity' cannot be null");

            // SendLogEntries validates logType and the entity's property types.
            List<T> list = new List<T> { entity };
            await SendLogEntries(list, logType).ConfigureAwait(false);
        }

        public async Task SendLogEntries<T>(List<T> entities, string logType)
        {
            #region Argument validation
            if (entities == null)
                throw new ArgumentNullException(nameof(entities), "parameter 'entities' cannot be null");

            if (string.IsNullOrEmpty(logType))
                throw new ArgumentNullException(nameof(logType), "parameter 'logType' cannot be null or empty");

            if (logType.Length > 100)
                throw new ArgumentOutOfRangeException(nameof(logType), logType.Length, "The size limit for this parameter is 100 characters.");

            if (!IsAlphaOnly(logType))
                throw new ArgumentOutOfRangeException(nameof(logType), logType, "Log-Type can only contain alpha characters. It does not support numerics or special characters.");

            foreach (var entity in entities)
                ValidatePropertyTypes(entity);
            #endregion

            var dateTimeNow = DateTime.UtcNow.ToString("r");
            var entityAsJson = JsonConvert.SerializeObject(entities);
            var authSignature = GetAuthSignature(entityAsJson, dateTimeNow);

            // Set headers on a per-request message rather than on httpClient.DefaultRequestHeaders,
            // which would race if several sends run concurrently on the same client.
            using (var request = new HttpRequestMessage(HttpMethod.Post, new Uri(RequestBaseUrl)))
            {
                request.Headers.Add("Authorization", authSignature);
                request.Headers.Add("Log-Type", logType);
                request.Headers.Add("Accept", "application/json");
                request.Headers.Add("x-ms-date", dateTimeNow);
                // To support custom date fields from the entity in the future,
                // add a "time-generated-field" header naming that DateTime property.

                request.Content = new StringContent(entityAsJson, Encoding.UTF8);
                request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/json");

                using (HttpResponseMessage response = await httpClient.SendAsync(request).ConfigureAwait(false))
                {
                    string result = await response.Content.ReadAsStringAsync().ConfigureAwait(false);

                    // Surface failed requests (for example an invalid signature) instead of silently ignoring them.
                    if (!response.IsSuccessStatusCode)
                        throw new HttpRequestException($"Log Analytics returned {(int)response.StatusCode} {response.StatusCode}: {result}");
                }
            }
        }

        #region Helpers
        private string GetAuthSignature(string serializedJsonObject, string dateString)
        {
            // The signed content length must be the UTF-8 byte count of the body, not the
            // character count; the two differ as soon as the JSON contains non-ASCII text.
            int contentLength = Encoding.UTF8.GetByteCount(serializedJsonObject);
            string stringToSign = $"POST\n{contentLength}\napplication/json\nx-ms-date:{dateString}\n/api/logs";
            string signedString;

            var encoding = new ASCIIEncoding();
            var sharedKeyBytes = Convert.FromBase64String(SharedKey);
            var stringToSignBytes = encoding.GetBytes(stringToSign);

            using (var hmacsha256Encryption = new HMACSHA256(sharedKeyBytes))
            {
                var hashBytes = hmacsha256Encryption.ComputeHash(stringToSignBytes);
                signedString = Convert.ToBase64String(hashBytes);
            }

            return $"SharedKey {WorkspaceId}:{signedString}";
        }

        private bool IsAlphaOnly(string str)
        {
            return Regex.IsMatch(str, @"^[a-zA-Z]+$");
        }

        private void ValidatePropertyTypes<T>(T entity)
        {
            if (entity == null)
                throw new ArgumentNullException(nameof(entity), "entities cannot contain null entries");

            // as of 2018-10-30, the allowed property types for log analytics, as defined here (https://docs.microsoft.com/en-us/azure/log-analytics/log-analytics-data-collector-api#record-type-and-properties) are: string, bool, double, datetime, guid.
            // anything else will be throwing an exception here.
            foreach (PropertyInfo propertyInfo in entity.GetType().GetProperties())
            {
                if (propertyInfo.PropertyType != typeof(string) &&
                    propertyInfo.PropertyType != typeof(bool) &&
                    propertyInfo.PropertyType != typeof(double) &&
                    propertyInfo.PropertyType != typeof(DateTime) &&
                    propertyInfo.PropertyType != typeof(Guid))
                {
                    // The two-argument overload keeps the parameter name and the message separate.
                    throw new ArgumentOutOfRangeException(nameof(entity), $"Property '{propertyInfo.Name}' of entity with type '{entity.GetType()}' is not one of the valid properties. Valid properties are String, Boolean, Double, DateTime, Guid.");
                }
            }
        }
        #endregion
    }
}
```

4. See the results in Log Analytics

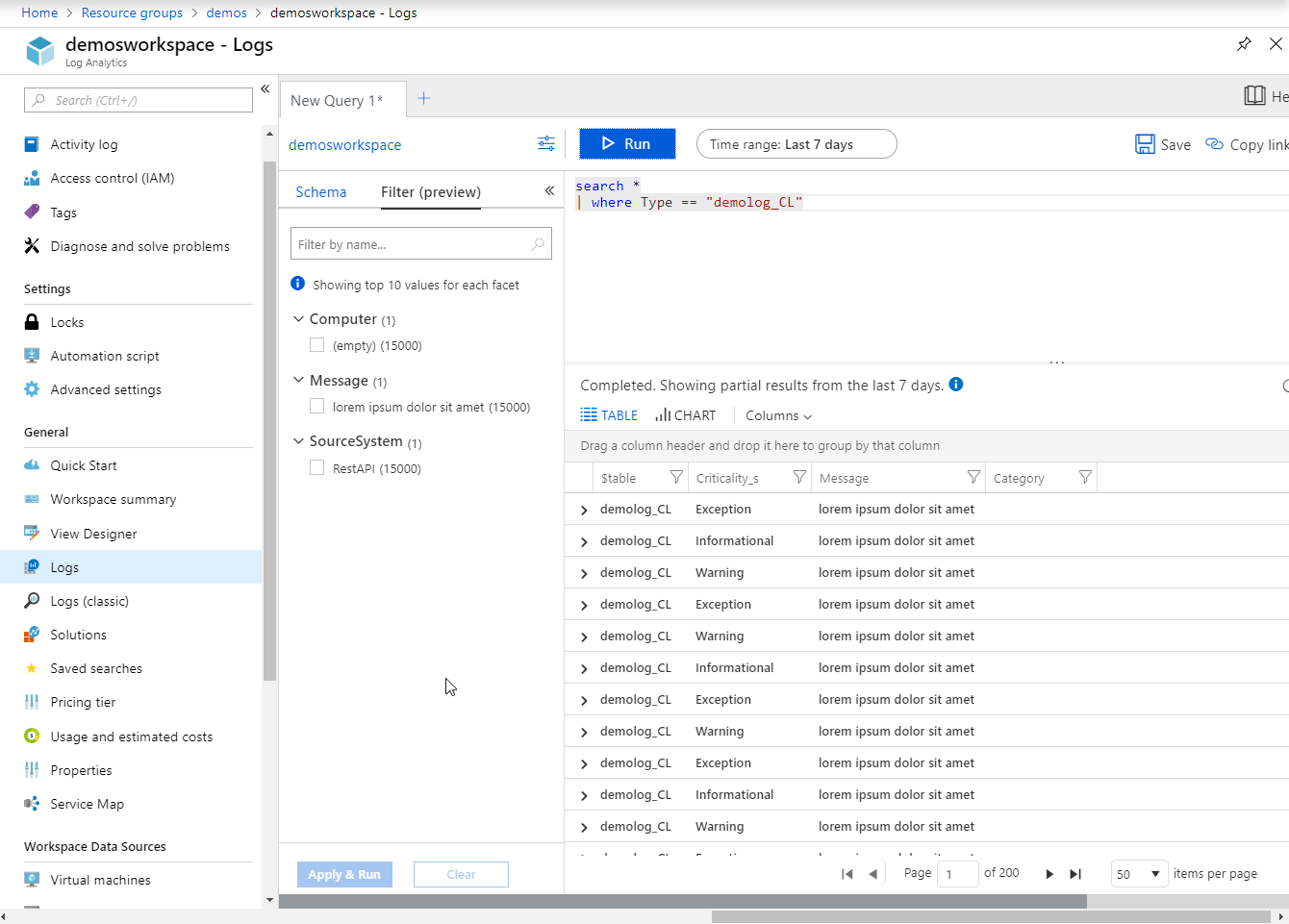

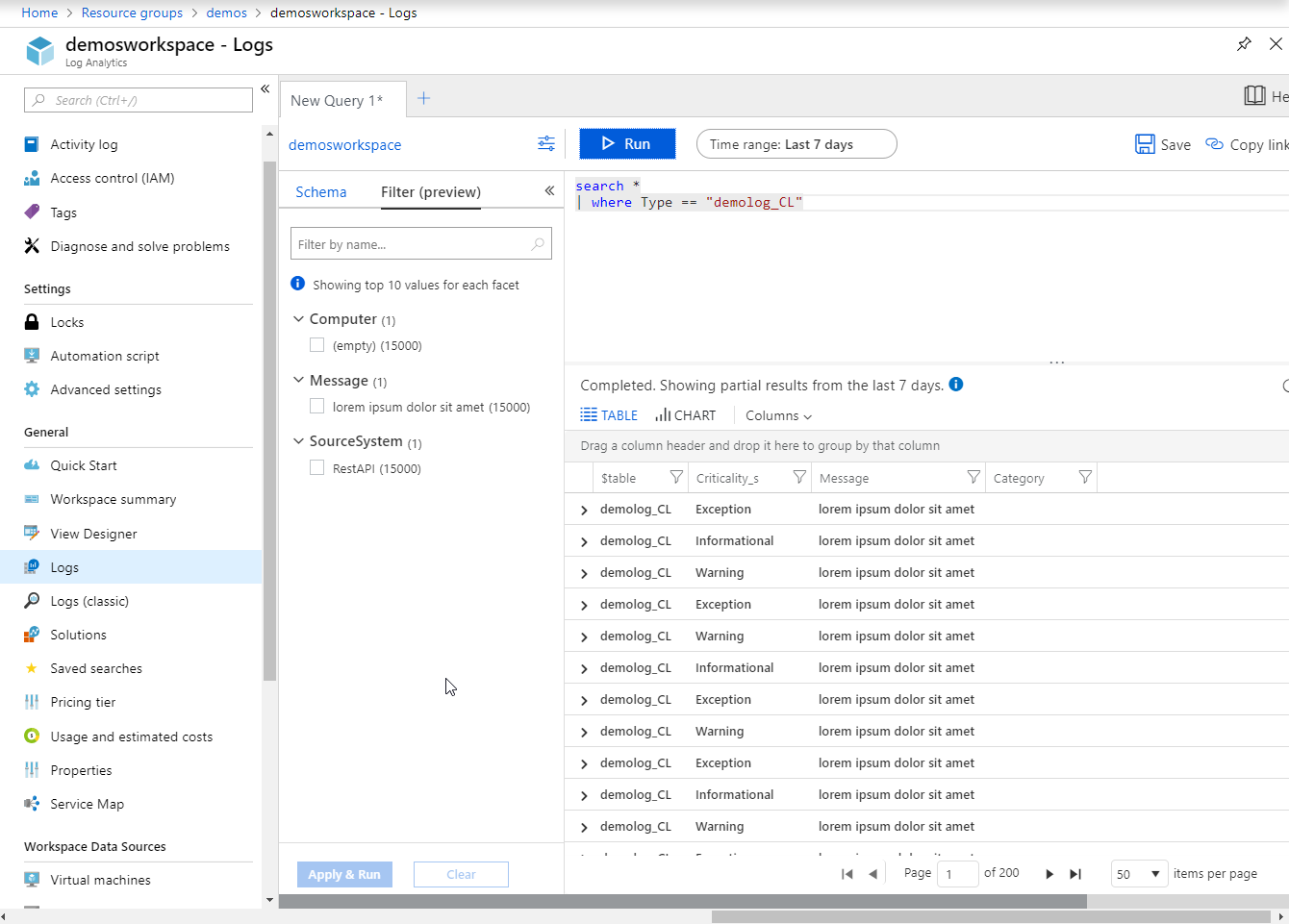

After you've pushed the log entries and waited a couple of minutes for them to appear, you can see them in the Azure Log Analytics dashboards or retrieve them with custom queries.

I'm using "demolog" as the log type name in my samples. Azure appends the suffix _CL (Custom Log) to it, so the data ends up in a custom log named demolog_CL. To query my new data, I can run this query:

```kusto
search *
| where Type == "demolog_CL"
```

In the Logs view, I would then see the data as in the screenshots above.

You can now drill down into the data, you can filter and work with it, and you can build visually stunning graphs for quick but powerful insights into what you've got going on in your logs.

Summary & Links

That's a wrap(per) for this time. A simple way to push one or more log entries to Azure Log Analytics using C#.

- Get the package - LogAnalytics.Client (NuGet)

- Get the source code - LogAnalytics.Client (GitHub)

- Read more - Send data to Log Analytics with the HTTP Data Collector API (Microsoft Docs)
