Building scalable cloud systems with great performance using Azure Cache for Redis


When building cloud systems and software, whether publicly or privately available, performance is important.

My favorite things about Azure Cache for Redis:

- Increases performance a LOT, especially if you have some heavy queries.

- In-memory distributed cache, serving data extremely fast.

- Super easy to use.

- No need to manage the server; it's fully managed by Microsoft in Azure.

- The more requests you serve from the cache, the more bandwidth and credits you save on underlying systems that you don't need to roundtrip to.

In this post I'll introduce how to get started with Azure Cache for Redis, as well as some of my thoughts that could be worth digesting, including:

- Security considerations.

- Running Redis commands.

- API Management and Azure Cache for Redis together.

- Using the Redis server from C#.

- Implementing a retry policy for transient faults.

I brought some of my most exposed endpoints down from 450 ms on average (measured over ~1,000 requests hitting a complex DB query) to 62 ms on average (measured from the client to the API across the internet) when served straight from the cache.

Tag along!

Security configuration / considerations

Azure Cache for Redis uses fairly simple authentication options; its primary goal is to be a performance monster, so complex auth isn't implemented. Hopefully there will be more options in the future, but right now you can use:

- Password (access key) authentication.

All clients and APIs authenticate with the same shared access key (there is a primary and a secondary key, mainly so you can rotate them), so if you want more granular and complex auth controls, you have to put them in place yourself. In this regard, if you're using API Management, you can set up caching there instead of giving the final endpoint that responsibility. More on that further down in this article.

Also, values are stored in the cache exactly as you write them; there is no built-in per-value encryption. If you need the cached data itself encrypted, encrypt it in your code before it goes into the cache.
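If you do encrypt values before caching them, a minimal sketch could look like the following. It assumes a 256-bit key that you load from somewhere safe such as Key Vault (key management is out of scope here), and `CachePayloadProtector` is a hypothetical helper name. It uses AES in its default CBC mode with a random IV per value, which gives confidentiality only; if you also need tamper detection, an authenticated mode such as AES-GCM is the better fit.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: encrypts a value in application code before it is written to the cache,
// and decrypts it after it is read back. Confidentiality only (AES-CBC, no authentication tag).
public static class CachePayloadProtector
{
    private const int IvSize = 16; // AES block size in bytes

    // Returns IV || ciphertext, ready to be stored as the cache value.
    public static byte[] Protect(byte[] key, string plaintext)
    {
        using (Aes aes = Aes.Create())
        {
            aes.Key = key;    // a 32-byte key selects AES-256
            aes.GenerateIV(); // fresh random IV for every value

            byte[] plainBytes = Encoding.UTF8.GetBytes(plaintext);
            byte[] cipher;
            using (ICryptoTransform encryptor = aes.CreateEncryptor())
            {
                cipher = encryptor.TransformFinalBlock(plainBytes, 0, plainBytes.Length);
            }

            byte[] payload = new byte[IvSize + cipher.Length];
            Buffer.BlockCopy(aes.IV, 0, payload, 0, IvSize);
            Buffer.BlockCopy(cipher, 0, payload, IvSize, cipher.Length);
            return payload;
        }
    }

    // Splits the stored payload back into IV and ciphertext and decrypts it.
    public static string Unprotect(byte[] key, byte[] payload)
    {
        if (payload == null || payload.Length <= IvSize)
            throw new ArgumentException("Payload is too short to contain an IV and ciphertext.", nameof(payload));

        byte[] iv = new byte[IvSize];
        Buffer.BlockCopy(payload, 0, iv, 0, IvSize);

        using (Aes aes = Aes.Create())
        {
            aes.Key = key;
            aes.IV = iv;
            using (ICryptoTransform decryptor = aes.CreateDecryptor())
            {
                byte[] plainBytes = decryptor.TransformFinalBlock(payload, IvSize, payload.Length - IvSize);
                return Encoding.UTF8.GetString(plainBytes);
            }
        }
    }
}
```

The resulting byte array can be written with `IDistributedCache.SetAsync`, and the bytes returned by `GetAsync` passed to `Unprotect`. Because a new IV is generated per value, caching the same data twice produces different ciphertexts.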

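Transport security, on the other hand, is supported: Azure Cache for Redis accepts TLS connections on port 6380, and the non-TLS port (6379) is disabled by default. Keep it that way, and there's no need to tunnel the traffic through an SSL proxy yourself.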
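As a minimal sketch of a TLS connection, the helper below builds a StackExchange.Redis connection string that targets the TLS port. `AzureRedisConnection` and `BuildTlsConnectionString` are hypothetical names, the host name assumes the public Azure cloud's `redis.cache.windows.net` suffix, and in a real app the access key would come from configuration or Key Vault rather than code.

```csharp
using System;

// Hypothetical helper: builds a StackExchange.Redis connection string that uses TLS.
// Azure Cache for Redis serves TLS on port 6380; the non-TLS port 6379 is disabled by default.
public static class AzureRedisConnection
{
    public static string BuildTlsConnectionString(string cacheName, string accessKey)
    {
        if (string.IsNullOrWhiteSpace(cacheName))
            throw new ArgumentException("Cache name is required.", nameof(cacheName));
        if (string.IsNullOrWhiteSpace(accessKey))
            throw new ArgumentException("Access key is required.", nameof(accessKey));

        // ssl=True turns on TLS; abortConnect=False lets the client keep retrying in the
        // background instead of failing hard when the first connect attempt fails.
        return $"{cacheName}.redis.cache.windows.net:6380,password={accessKey},ssl=True,abortConnect=False";
    }
}
```

The resulting string can be assigned to `options.Configuration` in the Startup.cs wire-up or passed to `ConnectionMultiplexer.Connect`.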

I'd love to see Managed Identity here at some point.

You can enable a firewall on the Redis server to deny all requests except those from your allowed IP ranges. It's fairly basic, but it adds a layer of security.

Executing Redis console commands

A Redis server lets you run commands to interact with the cache, and Azure Cache for Redis is no exception. Here's how.

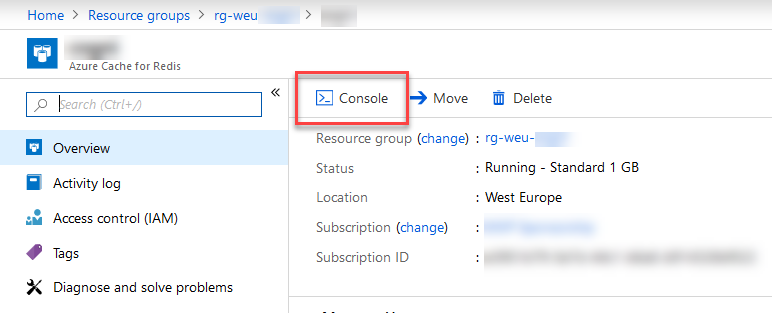

From the Azure Portal, go to your Redis resource and you will see a Console button on the overview page:

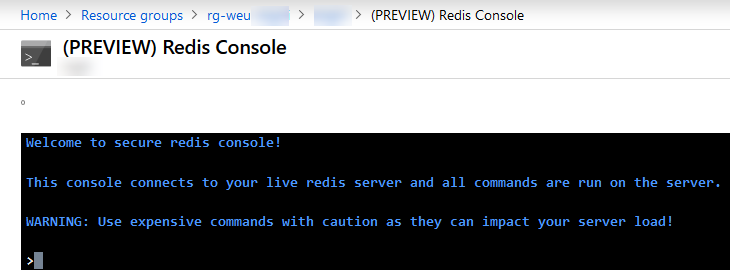

This brings up the console, and you can execute any commands you'd like:

See a list of Redis commands, should you be interested: https://redis.io/commands

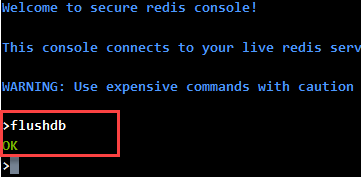

For example, to clear all keys from the current database, run FLUSHDB.

Using Azure Cache for Redis with API Management

I'm using Azure API Management for some high-throughput APIs that I have running. With API Management, you can also easily connect a cache to increase performance (read: Add caching to improve performance in Azure API Management).

Since we're talking about Redis here, let me use it as the example for setting this up. I'll only touch on it here; the link above covers how to start using it in more detail. It's pretty neat.

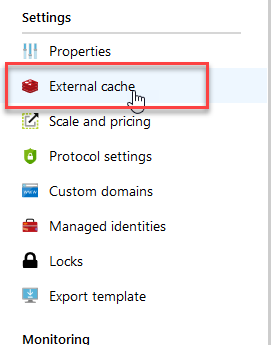

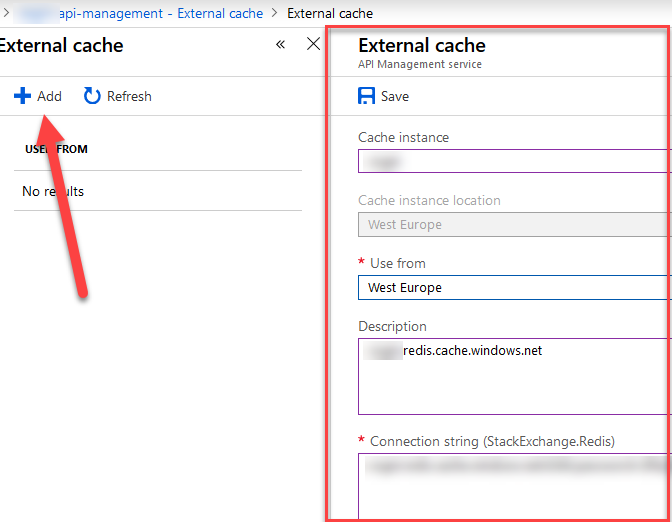

From your API Management resource, go to External cache:

Now the cache is configured for API Management, and you can start designing your APIs with the benefits of the Redis cache.

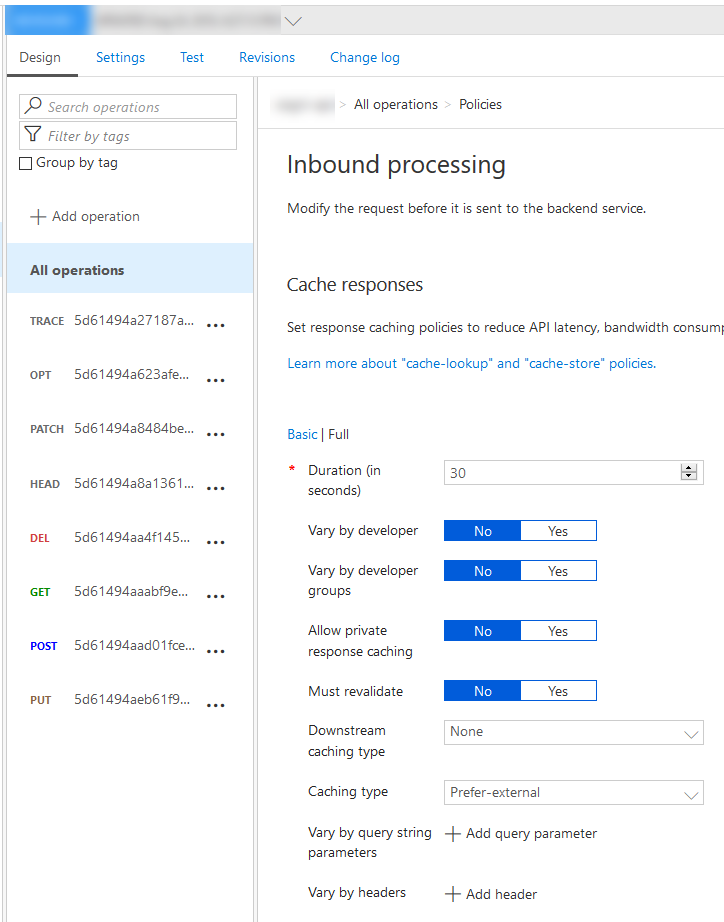

To design with cached responses, you can now do this from the UI instead of in code:

More on that in the link I mentioned above. We're not here to talk about API Management :)

Working with Azure Cache for Redis programmatically

Using Redis with C# .NET Core

There are a few different ways to use Redis programmatically; one thing I regularly do is hook my APIs up to it where I see fit.

In this example I've got a .NET Core API (C#), and here's what's needed to wire things up.

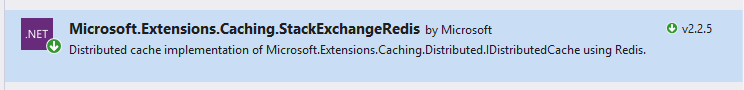

1. Install the NuGet package Microsoft.Extensions.Caching.StackExchangeRedis.

2. Wire up your service connection to Redis in the Startup.cs file as follows:

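Using statements:

```csharp
using Microsoft.Extensions.DependencyInjection; // AddStackExchangeRedisCache extension method
using StackExchange.Redis;                      // ConfigurationOptions and retry policies (used in the retry example later)
```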

Code to wire up the connection:

```csharp
services.AddStackExchangeRedisCache(options =>
{
    options.Configuration = "your-connection-string-here";
    options.InstanceName = "your-instance-name-here";
});
```

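You'll notice how easy that is; you're done with the connection. InstanceName is prepended to every cache key, which lets several apps share one cache without key collisions. Next up is reading from and writing to the cache.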

3. Read and Write to the Redis cache from .NET Core

In my API methods, I have made decisions about where I need caching and what type of expiration I need. These decisions are based on how complex (costly) the queries are and how important low latency and high performance are, combined with how often the endpoint is hit.

For example, some of my endpoints are hit once every hour. Some endpoints are hit more than 50,000 times per day from a plethora of microservices in Functions, API Apps and Azure Container Instances. I don't need caching for the low-frequency queries, because I know they still perform well due to their simple nature. However, for the endpoints that are extremely heavily hit, I want to avoid a complete roundtrip to the DB servers only to get the same result set for every request, so for those cases I manually define the cache expiration. You can opt in to whatever defaults you want too, of course.

In this example, it's an API controller I'm implementing.

Using statements:

```csharp
using Microsoft.Extensions.Caching.Distributed; // IDistributedCache, DistributedCacheEntryOptions
using System.Text.Json;                         // JSON (de)serialization of the cached values
```

I'm using dependency injection to get hold of the IDistributedCache object (my Redis server):

```csharp
private readonly INewsService _newsService;
private readonly IDistributedCache _cache;

public NewsController(INewsService newsService, IDistributedCache cache)
{
    _newsService = newsService;
    _cache = cache;
}
```

Here you'll see I'm tying in the distributed cache, which is the Redis server I hooked up in the Startup.cs file previously. IDistributedCache stores raw bytes (with string helpers such as GetStringAsync and SetStringAsync), so the action serializes the result to JSON before caching it:

```csharp
[HttpGet]
public async Task<IEnumerable<NewsInfoLite>> Get(int count = 10)
{
    // unique key for this query
    string cacheKey = $"/api/news?count={count}";

    // get items from the cache, if any, and return them
    string cachedJson = await _cache.GetStringAsync(cacheKey);
    if (cachedJson != null)
        return JsonSerializer.Deserialize<List<NewsInfoLite>>(cachedJson);

    // if the cache was empty, grab fresh items from the DB
    var newsItems = await _newsService.GetLatest(count);

    // save the fetched items to the cache with your desired expiration policy
    await _cache.SetStringAsync(
        cacheKey,
        JsonSerializer.Serialize(newsItems),
        new DistributedCacheEntryOptions { SlidingExpiration = TimeSpan.FromMinutes(1) });

    // return the freshly fetched data (cachedJson is null at this point)
    return newsItems;
}
```

I've added comments to describe what's happening.

- Try to get the data from the cache

- Return the data if it's cached

- If not cached, fetch fresh data

- Save it to the cache, then return it
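These four steps are the cache-aside pattern. To see the flow in isolation, here is a small self-contained sketch. `IStringCache` and `InMemoryStringCache` are hypothetical stand-ins for IDistributedCache (so the example runs without the Redis packages), and `GetOrSetAsync` is an illustrative helper name, not a library API; against the real IDistributedCache you would call GetStringAsync and SetStringAsync with DistributedCacheEntryOptions instead.

```csharp
using System;
using System.Collections.Concurrent;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical minimal cache abstraction standing in for IDistributedCache.
public interface IStringCache
{
    Task<string> GetStringAsync(string key); // null when the key is missing or expired
    Task SetStringAsync(string key, string value, TimeSpan ttl);
}

// In-memory stand-in with an absolute per-entry expiry, for demonstration only.
public sealed class InMemoryStringCache : IStringCache
{
    private readonly ConcurrentDictionary<string, Tuple<string, DateTimeOffset>> _entries =
        new ConcurrentDictionary<string, Tuple<string, DateTimeOffset>>();

    public Task<string> GetStringAsync(string key)
    {
        if (_entries.TryGetValue(key, out var entry) && entry.Item2 > DateTimeOffset.UtcNow)
            return Task.FromResult(entry.Item1);
        return Task.FromResult<string>(null);
    }

    public Task SetStringAsync(string key, string value, TimeSpan ttl)
    {
        _entries[key] = Tuple.Create(value, DateTimeOffset.UtcNow.Add(ttl));
        return Task.CompletedTask;
    }
}

public static class CacheAside
{
    // 1. try the cache, 2. return on a hit, 3. otherwise load fresh data, 4. store it and return it.
    public static async Task<T> GetOrSetAsync<T>(IStringCache cache, string key, Func<Task<T>> loadFresh, TimeSpan ttl)
    {
        string cached = await cache.GetStringAsync(key);
        if (cached != null)
            return JsonSerializer.Deserialize<T>(cached);

        T fresh = await loadFresh();
        await cache.SetStringAsync(key, JsonSerializer.Serialize(fresh), ttl);
        return fresh; // return the fresh data, not the (empty) cache lookup
    }
}
```

Wrapping the pattern in one helper keeps every endpoint from re-implementing the hit/miss logic, and makes it harder to accidentally return the empty cache result on a miss.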

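The first hit on this endpoint goes to the DB, since there's no cached data yet. If another request arrives within a minute, it's served straight out of the in-memory distributed Redis cache. Keep in mind that with a sliding expiration, every cache read resets the one-minute window, so an endpoint that is hit more often than once a minute can keep serving the same cached data indefinitely; if the data must be refreshed on a schedule, also set AbsoluteExpirationRelativeToNow on the DistributedCacheEntryOptions.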

This is blazingly fast. I don't know if blazingly is a word, but if not, it should be. Performance deluxe!
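Sliding expiration is extended by every read, while absolute expiration caps an entry's total lifetime. The small model below (an illustration of those semantics, not the library's code; `ExpiryModel` and its parameters are hypothetical) computes when an entry written at time 0 expires for a given series of reads, which shows why a one-minute sliding window alone never expires on a steadily busy endpoint.

```csharp
using System;
using System.Collections.Generic;

// Illustrative model of sliding vs. absolute expiration (not the library's implementation).
// All times are seconds since the entry was written; the entry is written at t = 0.
public static class ExpiryModel
{
    // Returns when the entry expires, or null if it is still alive at observeUntil.
    // readTimes must be ascending. slidingWindow and absoluteLifetime may be null (not set).
    public static double? ExpiresAt(IReadOnlyList<double> readTimes, double? slidingWindow,
                                    double? absoluteLifetime, double observeUntil)
    {
        double slidingDeadline = slidingWindow ?? double.PositiveInfinity;
        double absoluteDeadline = absoluteLifetime ?? double.PositiveInfinity;

        foreach (double t in readTimes)
        {
            double deadline = Math.Min(slidingDeadline, absoluteDeadline);
            if (t >= deadline)
                return deadline; // the entry expired before this read arrived

            if (slidingWindow.HasValue)
                slidingDeadline = t + slidingWindow.Value; // a cache hit pushes the sliding deadline forward
        }

        double finalDeadline = Math.Min(slidingDeadline, absoluteDeadline);
        return finalDeadline <= observeUntil ? finalDeadline : (double?)null;
    }
}
```

With a read every 2 seconds for 10 minutes, a 60-second sliding window alone never expires, while adding a 300-second absolute lifetime forces a refresh at 300 seconds. In DistributedCacheEntryOptions terms, that second case corresponds to setting both SlidingExpiration and AbsoluteExpirationRelativeToNow.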

Retry guidance with StackExchange.Redis

A lot of services can be throttled for various reasons, and sometimes other factors, such as a network connection issue at that particular moment, keep a request from getting through. These are usually called transient faults.

Azure Cache for Redis is no exception, and as a best practice you should always implement a retry pattern for your requests. Here's an extended version of the configuration code from the previous example; note the lines in the middle:

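```csharp
services.AddStackExchangeRedisCache(options =>
{
    // Parse the connection string first: when ConfigurationOptions is set, it takes
    // precedence over the Configuration string, so the endpoint and password must live in it.
    var redisOptions = ConfigurationOptions.Parse(
        myKeyVaultConfigService.GetByKey("redis-connectionstring").Result);

    redisOptions.ConnectRetry = 3;                             // repeat the initial connect attempt up to 3 times
    redisOptions.ReconnectRetryPolicy = new LinearRetry(1500); // reconnect attempts at least 1.5 s apart

    options.ConfigurationOptions = redisOptions;
    options.InstanceName = "myawesome-api";
});
```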

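Specifically, look at the ConnectRetry and ReconnectRetryPolicy properties: ConnectRetry = 3 repeats the initial connection attempt up to three times, and LinearRetry(1500) makes the client try to re-establish a dropped connection at intervals of at least 1.5 seconds.

So if the initial connection fails, or an established connection drops, the client automatically tries again. Note that these settings apply to the connection, not to individual commands: a cache read or write that times out or hits a connection error still throws in your code.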
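ConnectRetry and ReconnectRetryPolicy govern the connection rather than individual commands, so you may also want to retry a single cache call on a transient error. A minimal sketch, assuming a hypothetical `TransientRetry` helper with exponential backoff; in a real app the `isTransient` predicate would typically check for StackExchange.Redis exception types such as RedisConnectionException and RedisTimeoutException.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper: retries one operation on transient failures with exponential backoff.
public static class TransientRetry
{
    public static async Task<T> ExecuteAsync<T>(
        Func<Task<T>> operation,
        Func<Exception, bool> isTransient,
        int maxAttempts = 3,
        int baseDelayMs = 200)
    {
        if (maxAttempts < 1) throw new ArgumentOutOfRangeException(nameof(maxAttempts));

        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return await operation();
            }
            // Only transient errors are retried, and only while attempts remain;
            // anything else (or the final failure) propagates to the caller.
            catch (Exception ex) when (attempt < maxAttempts && isTransient(ex))
            {
                // Back off baseDelayMs, 2x, 4x, ... before the next attempt.
                int delayMs = baseDelayMs * (1 << (attempt - 1));
                await Task.Delay(delayMs);
            }
        }
    }
}
```

Cache reads and plain key writes are idempotent, so retrying them is safe; keep the attempt count low so a struggling cache doesn't add much latency on top of the DB fallback.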

Best Practices and Links

There's a plethora of information available online, so instead of reiterating similar content, here's a list of links if you'd like to dig deeper.

General

- MS Docs: Azure Cache for Redis documentation

- MS Docs: Azure Cache for Redis FAQ

- MS Docs: Task-based Asynchronous pattern

- Redis.io: Redis documentation

- Redis.io: Commands

- GitHub: StackExchange.Redis (what I'm using in this post)

Caching Patterns

- MS Docs on Architecture: Cache-aside pattern

- MS Docs on Architecture: Sharding pattern

Security

- Redis.io: Redis Security

- MS Docs: Redis security


Header image from Unsplash.
