Embrace a Secure Software Development Lifecycle (SDLC) for Azure


While threats are ever-increasing, so are the capabilities, methodologies, and technologies we have at our disposal to mitigate risks at a higher cadence than ever before.

In this article, I'll talk about the responsibility we have as developers, solution architects, DevOps engineers, and anyone else involved in our teams. Security is a team effort, and everyone needs to agree on which processes and rules to follow. Today we can automate much of what we do for code quality and security, so the road to a stronger security posture doesn't have to be a long one.

Regardless of where you run your code, it needs to be secured one way or another. We hear talks and see threads unfold where the underlying assumption is that the cloud is inherently secure because it has "all kinds of security checks built-in."

Most cloud vendors have clear recommendations that detail how you are responsible for securing your cloud assets with available measures. They manage and provide the resources, with built-in security controls and industry-grade security capabilities.

The choice, however, is up to you. Design your code and cloud infrastructure according to security best practices, which include measures that may not be enabled by default.

Examples of this in Azure are:

- Firewall and network restrictions are disabled by default for Storage Accounts, Key Vaults, Azure App Services, and more, so these resources accept traffic from any network until you configure them otherwise.

There are many reasons why these things are not decided for you by default. Nobody but you knows how your infrastructure should look and work, and the network and traffic flow is something you need to design yourself.

By using ARM templates, Infrastructure as Code (IaC), or other ways to automate your setups, you can ensure that the security baseline you want is in place by default whenever you deploy your resources. Again, though, ensuring this is your responsibility.
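As a minimal sketch of enforcing such a baseline automatically, the Python script below reads an ARM template (JSON) and flags Storage Accounts and Key Vaults whose `networkAcls.defaultAction` isn't set to `Deny`. It's an illustration under stated assumptions, not a complete policy engine: it only inspects top-level resources, doesn't evaluate template expressions, and ignores related settings such as `publicNetworkAccess`. For auditing or enforcing rules like this across subscriptions, Azure Policy is the built-in option.

```python
import json
import sys

# Resource types this sketch checks; both expose properties.networkAcls.defaultAction
# in ARM templates. ARM resource types are case-insensitive, so compare lowercased.
CHECKED_TYPES = {
    "microsoft.storage/storageaccounts",
    "microsoft.keyvault/vaults",
}


def find_open_resources(template: dict) -> list[str]:
    """Return names of checked resources whose network ACLs don't default to Deny."""
    resources = template.get("resources", [])
    # Templates using symbolic names (languageVersion 2.0) store resources in an object.
    if isinstance(resources, dict):
        resources = list(resources.values())
    findings = []
    for resource in resources:
        if resource.get("type", "").lower() not in CHECKED_TYPES:
            continue
        acls = (resource.get("properties") or {}).get("networkAcls") or {}
        # No networkAcls block means the platform default applies,
        # which accepts traffic from all networks.
        if acls.get("defaultAction") != "Deny":
            findings.append(resource.get("name", "<unnamed>"))
    return findings


def main(path: str) -> int:
    with open(path, encoding="utf-8") as f:
        template = json.load(f)
    open_resources = find_open_resources(template)
    for name in open_resources:
        print(f"Network access not restricted by default: {name}")
    # A non-zero exit code lets a pipeline step fail when there are findings.
    return 1 if open_resources else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

For example, `python check_network_acls.py azuredeploy.json` (both file names are hypothetical) prints each unrestricted resource and exits with code 1, so the same script can act as a gate in a deployment pipeline.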

Security in code and cloud

Before we go hands-on, let's take a look from above.

Security breaches

In 2019 alone, there has been an unprecedented number of known security breaches. Sometimes it's because of bad configuration, sometimes because of harmful or poor code, and sometimes other factors like employees or staff having access to more than they should. There's a wide variety of reasons behind security breaches, and that's a story some security experts tell better than I can.

For a brief overview of recent security breaches, here's some food for thought:

- All Data Breaches in 2019 – An Alarming Timeline (SelfKey)

- Lessons Learned from 7 Big Breaches in 2019 (Dark Reading)

- List of data breaches (Wikipedia)

- Pwned Websites (Have I Been Pwned, HIBP)

- ...and the list goes on

My inbox gets many security alerts every day about new breaches, malware infections, and other malicious and suspicious behavior from known attacks. I let this be a good reminder not to let go of the security focus. We cannot, with good conscience, compromise security for the sake of convenience.

Third-party software issues

Attacks also target third-party components, libraries, and software. It's not just big corporations that are targets; even the libraries you use every day can be compromised to include malware or vulnerabilities, and this has happened more than a few times.

If you are developing software, whether for internal use or for customers, there's a good chance you're relying on third-party components from NuGet, npm, and other sources.

How do you know if the source code you blindly pull into your code is reliable and secure? How do you know that it doesn't steal credentials and data?
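One small part of the answer is making sure the package you install is byte-for-byte the one you pinned. npm, for instance, records an `integrity` hash for each package in `package-lock.json`. The Python sketch below shows the underlying check for a single Subresource Integrity-style string such as `sha512-<base64 digest>`. Note its limits: it only detects tampering after you pinned a version and says nothing about whether that version was malicious to begin with, which is where dependency scanning and review come in.

```python
import base64
import hashlib

# Algorithms accepted in Subresource Integrity-style strings.
SUPPORTED = {"sha256", "sha384", "sha512"}


def matches_integrity(data: bytes, integrity: str) -> bool:
    """Check package bytes against one SRI-style hash, e.g. "sha512-<base64 digest>".

    This sketch handles a single hash; SRI strings may list several, space-separated.
    """
    algorithm, _, expected_b64 = integrity.partition("-")
    if algorithm not in SUPPORTED:
        raise ValueError(f"unsupported integrity algorithm: {algorithm!r}")
    digest = hashlib.new(algorithm, data).digest()
    # Compare the base64-encoded digest of the actual bytes with the pinned value.
    return base64.b64encode(digest).decode("ascii") == expected_b64
```

In practice you rarely write this yourself: npm verifies lock-file integrity hashes during install, and pip's `--require-hashes` mode refuses packages whose hashes don't match the requirements file. The value of the sketch is in showing what those features do and don't protect against.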

The shared responsibility model in Azure

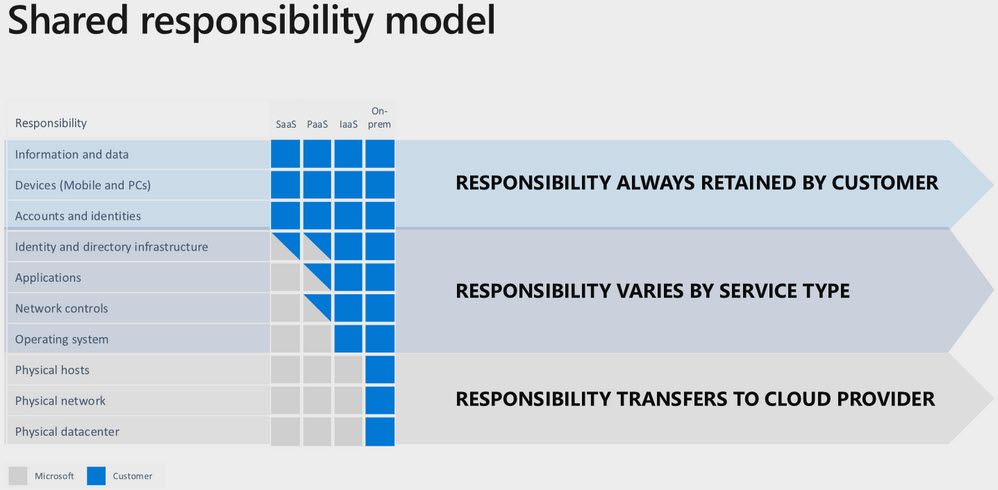

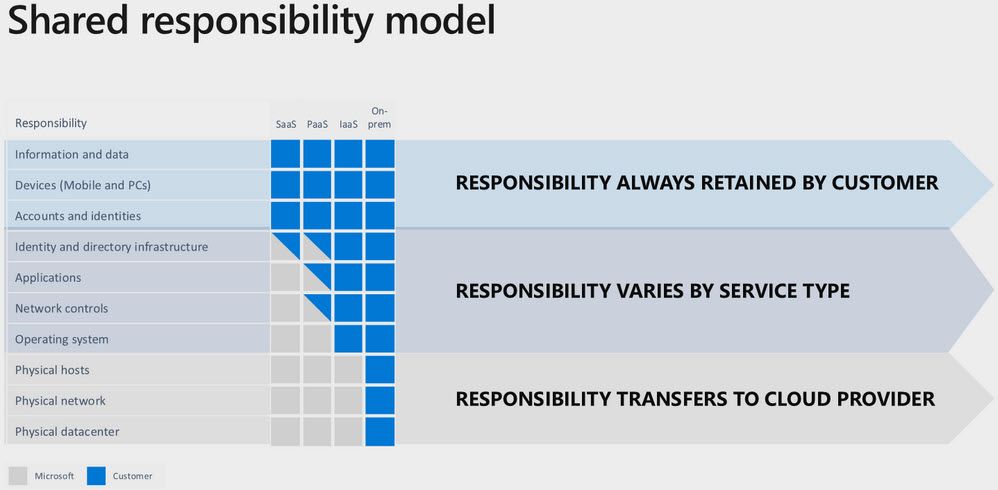

Many cloud providers have something called a Shared Responsibility Model, where they make it clear who's responsible for what type of asset in their cloud offerings.

For Azure, the model paints a picture like this, which is a bit simplified but shows insights into where the responsibility lies:

The main takeaway is that it's always your responsibility to secure:

- Data

- Endpoints

- Account

- Access management

Read more about the model on the Microsoft website.

Security is an organization-wide responsibility

A single individual or team can seldom establish strong security awareness across an organization if leaders aren't on board and setting a good example.

By training the team, collaborating on security-related tasks, and embracing a zero-trust, assume-compromise mindset, you can get many things done with fewer hurdles than if you face opposing forces internally.

The details differ depending on whether you're a small software vendor, an enterprise organization, or anything in between.

To embrace security in all departments from development to operations, everyone must understand what the baseline security awareness needs to be. You can build on that baseline over time without compromising productivity, agility, and other factors that also matter to your teams - starting somewhere is better than not starting at all.

Dark Reading has a post with some interesting angles.

What is a Secure Software Development Lifecycle?

A Secure Software Development Lifecycle (SDLC) helps you build highly secure software, supports compliance requirements, and applies to many phases of the development work. If you are in the Microsoft space, Microsoft has its own, the "Microsoft Security Development Lifecycle" (SDL), which is very well documented and comes with great resources.

The key phases of Microsoft's guidelines are:

- Training

- Requirements

- Design

- Implementation

- Verification

- Release

- Response

Microsoft also describes a significant number of practices in detail, with many useful links for further reading, on the Microsoft SDL site.

The very least you can do is to pick the most critical practices and start somewhere.

Defining a security baseline for you and your team(s) to follow in code and operations can significantly increase your security posture, with little impact on daily work routines. Again, starting somewhere is more important than not starting at all - I can't stress this enough.

As an example, I've run a couple of security tools on open source projects and my internal spare-time projects alike. Every single project comes back with recommendations for how to improve my security posture, which should not go unnoticed. I've already run security scans on both the codebases and the configurations in Azure several times. Still, the threat landscape changes rapidly, and with it so do the recommendations from the security tools.

Security is a continuous effort. Because threats are ever-changing, the security toolchains we use change too, which makes it essential to run them regularly, as a routine - don't compromise.

Embracing the shift in mindset to DevSecOps

Microsoft has embraced what we now know as DevSecOps, which implies that security is a vital part of DevOps.

Buck Hodges talks about how Microsoft made its shift to DevSecOps. It's an excellent presentation with plenty of food for thought. He describes how Microsoft "attacks" itself internally with its Red and Blue Teams to uncover security issues before potential bad actors do.

He talks about how to shift the conversation from these points:

- How real is the threat?

- Our team is good, right?

- I don't think that's possible.

- We've never been breached.

- Better to ship features, right?

To:

- Where to invest resources and how.

- Don't just prevent a breach. Assume breach. It's about attitude.

- "It's not about IF, but WHEN."

- Security is a continuous effort.

More food for thought:

"Fundamentally, if somebody wants to get in, they're getting in... accept that. What we tell clients is: number one, you're in the fight, whether you thought you were or not. Number two, you almost certainly are penetrated." - Michael Hayden, former Director of the NSA and CIA

Found on: https://docs.microsoft.com/en-us/azure/devops/learn/devops-at-microsoft/security-in-devops

Shifting Left

There's a phrase you'll hear a lot: shift left. In DevOps and DevSecOps, it essentially means doing things earlier in the lifecycle. Consider a horizontal timeline of your phases, for example:

Plan → Code → Build → Test → Release → Operate

While a bit simplified, a model with these phases is common. Unfortunately, security testing often happens in the "test" phase, later, or not at all. With the shift-left mindset, we move those activities earlier in the timeline. In practice, this means integrating security tests even before we produce an official build that can be tested or released.

One example in Azure DevOps is a branch policy on pull requests: before a merge can complete, a set of tools and builds must run successfully. These can include code security tools, code analysis, credential scanning, and more.

The earlier we detect issues, the better. Moving these checks to the earlier stages of the lifecycle means fewer builds pass through only to fail at a later stage.
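To make the credential-scanning step concrete, here is a minimal Python sketch of a check that could run as a build validation step in such a policy: it scans the given files with a few regular expressions and exits non-zero on any match, which fails the build and blocks the merge. The rule set and the script name `scan_secrets.py` are hypothetical illustrations; dedicated credential scanners use much larger, tuned rule sets and will catch far more than this.

```python
import re
import sys
from pathlib import Path

# Hypothetical, deliberately small rule set for illustration only.
PATTERNS = {
    "Azure Storage connection string": re.compile(r"AccountKey=[A-Za-z0-9+/=]{40,}"),
    "Private key block": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
    "Hard-coded password": re.compile(r"(?i)password\s*[:=]\s*['\"][^'\"]{6,}['\"]"),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


def scan_paths(paths: list[str]) -> int:
    """Scan each file, print findings, and return the total number of findings."""
    total = 0
    for path in paths:
        text = Path(path).read_text(encoding="utf-8", errors="ignore")
        for lineno, rule in scan_text(text):
            print(f"{path}:{lineno}: possible secret ({rule})")
            total += 1
    return total


if __name__ == "__main__" and len(sys.argv) > 1:
    # A non-zero exit code fails the pipeline step, which in turn blocks the PR.
    sys.exit(1 if scan_paths(sys.argv[1:]) else 0)
```

A pipeline step could, for example, pass the files changed in the pull request to `python scan_secrets.py`. Running it on changed files only keeps the check fast enough to sit in front of every merge.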

More reading on shifting left:

- Shift Left Without Fear: The Role of Security in Enabling DevOps (DevOps)

- Shifting Left: DevSecOps as an Approach to Building Secure Applications

Summary

Thanks for reading. This post was a bit less technical, but it tackles fundamental concepts that we all need to embrace sooner rather than later.

It doesn't have to be complicated to start implementing proper security routines and guidelines in your team(s) - just being aware of the threat landscape and applying measures to start improving is better than ignoring it.

See additional links below.

General:

- Microsoft Security Engineering: DevSecOps (Microsoft)

- Microsoft Threat Modeling Tool (Microsoft)

Code Security Analysis, Credential Scanning:
